RAM-cached drives pair the speed of RAM with the capacity of a conventional drive, and they can deliver large performance gains for the right workloads. To judge whether those gains apply to you, you need to understand relative performance: how a RAM-cached setup compares with the same storage running without a cache. This article explains how RAM caching works, walks through the key performance metrics, and looks at the real-world impact. Whether you’re a tech enthusiast or an IT professional, understanding relative performance is key to getting the most out of RAM-cached drives.

The Basics of RAM Cached Drives

Relative Performance: A Key Metric

The Impact on System Performance

The Basics of RAM Cached Drives

A. Defining RAM Cached Drives

RAM cached drives, also known as cache hybrid drives, combine traditional storage technology with the speed of random-access memory (RAM). They set aside a portion of your system’s RAM as a cache that sits between your applications and the primary storage device, typically a solid-state drive (SSD) or hard disk drive (HDD). The aim is to balance storage capacity with rapid data access.

B. How RAM Caching Works

RAM caching places a fast intermediary layer, held in your computer’s main memory (RAM), between your applications and the primary storage medium. When you access data or applications, frequently used files and blocks are kept temporarily in the RAM cache, so the system can retrieve them faster than it could directly from the primary storage drive. Caching algorithms decide what data is cached and for how long; a common approach is to evict the least recently used data when the cache fills up.
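The idea can be sketched in a few lines of Python. This is a minimal illustration that assumes a least-recently-used (LRU) eviction policy; real caching products may use different or more elaborate policies. The names `LRUReadCache` and `fake_disk` are hypothetical and exist only for this example.

```python
from collections import OrderedDict


class LRUReadCache:
    """Tiny read cache that evicts the least recently used block when full."""

    def __init__(self, capacity_blocks, read_from_disk):
        self.capacity = capacity_blocks
        self.read_from_disk = read_from_disk  # hypothetical slow-path callable
        self.blocks = OrderedDict()           # block_id -> data, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, block_id):
        if block_id in self.blocks:
            # Cache hit: serve from RAM and mark the block as most recently used.
            self.blocks.move_to_end(block_id)
            self.hits += 1
            return self.blocks[block_id]
        # Cache miss: fall back to the primary drive, then keep a copy in RAM.
        self.misses += 1
        data = self.read_from_disk(block_id)
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict the least recently used block
        return data


def fake_disk(block_id):
    # Stand-in for a real device read (assumption for the example).
    return f"data-{block_id}"


cache = LRUReadCache(capacity_blocks=2, read_from_disk=fake_disk)
for block in [1, 2, 1, 3, 2]:
    cache.read(block)

print(cache.hits, cache.misses)
```

With room for only two blocks, the second read of block 1 is a hit, but block 2 has already been evicted by the time it is requested again, which shows how a cache that is too small for the access pattern loses much of its benefit.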

C. Advantages of RAM Caching

The advantages of RAM caching are manifold. First and foremost, it accelerates access to cached data, reducing application load times and file retrieval times once the relevant data is in the cache. RAM caching can also reduce wear and tear on the primary storage drive, because repeated requests are served from memory instead of the drive, potentially extending its lifespan. It also enhances multitasking capabilities, as data retrieval from the cache is far faster than retrieval from the drive. These benefits collectively contribute to a more responsive and efficient computing experience.

D. Common Use Cases

RAM-cached drives find utility across various computing scenarios. Everyday use cases include:

Gaming Enthusiasts: Gamers benefit from reduced loading times, seamless gameplay, and rapid level loading, enhancing their gaming experience.

Content Creators: Video and multimedia professionals experience accelerated editing and rendering processes, improving workflow efficiency.

Businesses and Enterprises: Enterprise-level applications, databases, and servers benefit from improved data access, ensuring smooth operation and reduced downtime.

Casual Users: Everyday users enjoy snappier application launches, swift file access, and a more responsive computing environment.

Understanding these fundamental aspects of RAM cached drives lays the groundwork for appreciating their impact on system performance. In the following sections, we will look more closely at relative performance and how it applies to these storage solutions.

Relative Performance: A Key Metric

A. Defining Relative Performance

Relative performance is a metric that gauges the effectiveness and efficiency of a system, component, or technology in comparison to the alternatives. In the context of RAM-cached drives, it shows how they perform relative to traditional storage solutions, such as standalone SSDs or HDDs. This measurement is valuable for evaluating the real-world benefits and trade-offs of adopting RAM caching technology.
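One simple way to express relative performance is as a ratio between a baseline measurement and a measurement of the cached configuration. The sketch below assumes that both runs perform the same task and that the timings come from your own tests; the figures and the function name `relative_performance` are made up for illustration.

```python
def relative_performance(baseline_seconds, candidate_seconds):
    """Return how many times faster the candidate is than the baseline.

    A value above 1.0 means the candidate finished the same task sooner.
    """
    if baseline_seconds <= 0 or candidate_seconds <= 0:
        raise ValueError("timings must be positive")
    return baseline_seconds / candidate_seconds


# Hypothetical timings for the same file-copy task (not measured data).
uncached_seconds = 12.0
cached_seconds = 3.0

speedup = relative_performance(uncached_seconds, cached_seconds)
print(f"The cached run is {speedup:.1f}x as fast as the uncached run")
```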

B. Why Relative Performance Matters

Relative performance matters because it helps users make informed decisions when selecting storage solutions. Understanding how RAM-cached drives perform in relation to other storage options allows individuals and organisations to assess whether the speed and efficiency gains outweigh potential drawbacks. This knowledge helps consumers and IT professionals make choices that align with their specific needs and priorities, whether that means enhanced gaming experiences, faster data processing, or improved server performance.

C. How to Measure Relative Performance

Measuring relative performance involves assessing various performance metrics to compare different storage solutions effectively. Key metrics used for this purpose include:

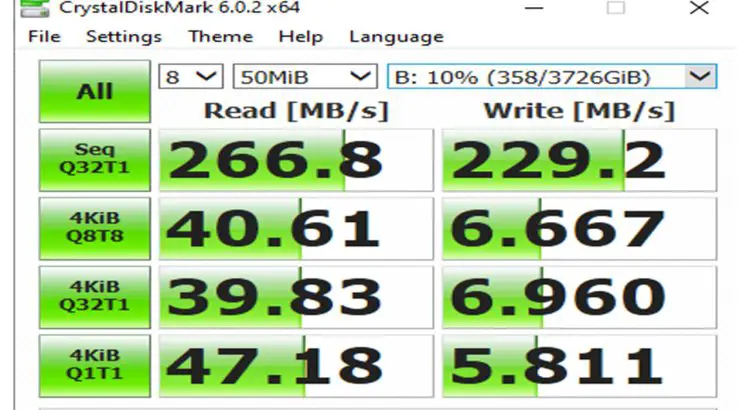

Throughput and Latency: Throughput measures the amount of data a storage system can transfer in a given time, while latency quantifies the delay between a data request and its delivery. Lower latency and higher throughput are generally indicative of better performance.

IOPS (Input/Output Operations Per Second): IOPS measures how many read and write operations a storage device can handle in one second. Higher IOPS values typically denote improved performance.

Benchmarking Tools: Specialised software tools and benchmarking tests are used to simulate real-world scenarios, allowing users to evaluate the performance of different storage solutions under specific conditions.
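The metrics above can all be derived from a handful of raw numbers recorded during a test run. The following sketch assumes you already have the total bytes transferred, the number of operations, the elapsed time, and a list of per-operation latencies; the function name `summarise_run` and the sample figures are hypothetical.

```python
def summarise_run(bytes_transferred, operations, elapsed_seconds, latencies_ms):
    """Derive throughput, IOPS, and mean latency from raw test-run counters."""
    if elapsed_seconds <= 0 or not latencies_ms:
        raise ValueError("need a positive duration and at least one latency sample")
    return {
        # Throughput: data moved per second, here in megabytes (10^6 bytes).
        "throughput_mb_s": bytes_transferred / elapsed_seconds / 1_000_000,
        # IOPS: completed read/write operations per second.
        "iops": operations / elapsed_seconds,
        # Latency: average delay between a request and its completion.
        "mean_latency_ms": sum(latencies_ms) / len(latencies_ms),
    }


# Made-up numbers for illustration only.
summary = summarise_run(
    bytes_transferred=400_000_000,
    operations=100_000,
    elapsed_seconds=2.0,
    latencies_ms=[1.0, 2.0, 3.0],
)
print(summary)
```

Running the same calculation for a cached and an uncached configuration gives the pairs of numbers needed for a relative comparison.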

D. Factors Affecting Relative Performance

Several factors can influence the relative performance of RAM-cached drives compared to other storage options:

Drive Capacity: The capacity of the primary storage drive (e.g., SSD or HDD) in a RAM-cached drive configuration can affect how much caching helps. A larger drive often holds more actively used data, and when that working set exceeds the cache size, cache evictions become more frequent and the performance benefit shrinks.

RAM Size: The amount of RAM allocated for caching plays a significant role. Greater RAM capacity allows more data to be cached, potentially resulting in improved performance.

Data Access Patterns: The nature of the data and how frequently it is accessed can affect caching effectiveness. Highly repetitive or frequently accessed data benefits more from caching.

Workload Intensity: The workload’s demands, such as gaming, content creation, or server operations, can influence how much performance improvement is observed with RAM caching.
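How these factors combine can be approximated with a weighted average: the more requests the cache can serve (the hit ratio), the closer the average access time gets to RAM speed. The sketch below uses that standard approximation; the latency figures are illustrative assumptions, not measurements of any particular device.

```python
def effective_latency_us(hit_ratio, cache_latency_us, disk_latency_us):
    """Average access time when a fraction `hit_ratio` of requests hit the cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_latency_us + (1.0 - hit_ratio) * disk_latency_us


# Assumed latencies for illustration: 1 microsecond for RAM, 100 for the drive.
RAM_US = 1.0
DRIVE_US = 100.0

for ratio in (0.0, 0.5, 1.0):
    print(ratio, effective_latency_us(ratio, RAM_US, DRIVE_US))
```

Under these assumed figures, a cache that serves only half of the requests still leaves the average dominated by the slower drive, which is why RAM size and access patterns matter so much.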

Understanding these factors and how they interact with relative performance metrics is crucial for making informed decisions about adopting RAM-cached drives and optimising their configuration for specific use cases. In the subsequent sections, we will explore how to detect the presence of RAM caching in your system, the impact of RAM caching on overall system performance, and the potential challenges and solutions associated with this technology.

Detecting RAM Cached Drives

A. Identifying RAM Caching in Storage Devices

To harness the benefits of RAM caching effectively, it’s essential first to identify whether a storage device is configured with RAM caching. This detection process involves looking for specific indications, which may include:

Product Documentation: Check the documentation accompanying the storage device, as manufacturers often provide information about RAM caching features and configurations.

Operating System Reports: Some operating systems, such as Windows and Linux, provide information about storage configurations in their system reports or device management tools. Look for any mentions of caching or acceleration.

Storage Device Specifications: Review the specifications of the storage device itself. Manufacturers often mention if RAM caching is a built-in feature.

B. Diagnostic Tools and Techniques

Detecting RAM cached drives may require more advanced diagnostic tools and techniques:

Third-Party Software: Utilise third-party software designed to analyse and report on storage configurations. These tools can provide detailed information about the presence and operation of RAM caching.

BIOS/UEFI Settings: Access the system’s BIOS/UEFI settings, where you may find options related to RAM caching or storage acceleration. Ensure that these settings are properly configured.

Operating System Utilities: Some operating systems have built-in utilities for managing and monitoring storage configurations. These utilities can reveal whether RAM caching is active and how it is configured.
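As one example of an operating system report, Linux exposes memory statistics in `/proc/meminfo`, including how much RAM the kernel is currently using to cache file data (`Cached`) and how much cached data is waiting to be written to disk (`Dirty`). The sketch below parses text in that format. It covers only the kernel's own page cache, not third-party caching products, and the sample text is made up so that the example runs anywhere.

```python
def parse_meminfo(text):
    """Parse /proc/meminfo-style text into a dict of field name -> value in kB."""
    fields = {}
    for line in text.splitlines():
        name, _, rest = line.partition(":")
        parts = rest.split()
        if parts and parts[0].isdigit():
            fields[name.strip()] = int(parts[0])
    return fields


# Made-up sample in the /proc/meminfo format (not real system output).
SAMPLE = """MemTotal:       16384000 kB
Cached:          4096000 kB
Dirty:               512 kB
"""

info = parse_meminfo(SAMPLE)
cached_share = info["Cached"] / info["MemTotal"]
print(f"{cached_share:.0%} of RAM holds cached file data; {info['Dirty']} kB not yet written")

# On a Linux system, the same function can be fed the live file:
# with open("/proc/meminfo") as f:
#     info = parse_meminfo(f.read())
```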

C. Benefits of Recognising RAM Cached Drives

Recognising the presence of RAM-cached drives in your system offers several advantages:

Informed Troubleshooting: Knowing that your system employs RAM caching allows you to troubleshoot performance issues more effectively. If caching is not functioning correctly, you can take steps to diagnose and rectify the problem.

Optimising System Configuration: With awareness of RAM caching, you can fine-tune your system’s configuration for maximum performance. This includes adjusting cache size, cache policies, and other relevant settings.

Exploiting Caching Advantages: Understanding RAM caching enables you to leverage its benefits intentionally. You can prioritise frequently used applications and data to ensure they are cached, improving overall performance.

Detecting RAM-cached drives is the first step towards harnessing the advantages of this technology. In the subsequent sections of this article, we will explore the real-world impact of RAM caching on system performance, discuss potential challenges and limitations, and provide insights into making informed decisions about storage solutions that incorporate RAM caching.

The Impact on System Performance

A. How RAM Caching Influences System Performance

RAM caching profoundly impacts system performance by significantly enhancing data access speeds and responsiveness. Here’s how it influences performance:

Speeding Up Data Access: RAM caching reduces the time it takes to access frequently used data. When you request a file or application, the system retrieves it from the high-speed RAM cache rather than the slower primary storage drive, resulting in near-instantaneous access.

Reducing Latency: RAM caching minimises the latency associated with data retrieval. Latency, the delay between making a request and receiving data, is substantially reduced, leading to a more responsive computing experience.

Enhancing Multitasking Capabilities: With data readily available in the RAM cache, your system can handle multiple tasks simultaneously without a significant performance drop. This is especially beneficial for tasks that involve frequent data access, such as video editing, gaming, and database operations.

B. Balancing RAM Caching with Other Storage Technologies

While RAM caching offers substantial performance benefits, it’s essential to strike a balance when integrating it with other storage technologies:

SSDs vs. HDDs vs. RAM Caching: SSDs provide excellent performance and reliability compared to traditional HDDs. When using RAM caching in conjunction with SSDs, the already impressive speed of SSDs is further amplified. However, balancing the investment between an SSD and RAM caching depends on the specific use case and budget considerations.

Hybrid Storage Solutions: Some systems combine SSDs and HDDs with RAM caching in a hybrid storage setup. This approach aims to provide both capacity and speed benefits. Careful consideration of how data is tiered between the different storage components is crucial for optimising performance.

Energy Consumption: RAM caching can increase power consumption due to the need to keep data in RAM. Balancing performance gains with energy efficiency is essential, especially for mobile devices and data centres where power efficiency is a concern.

C. Real-World Examples of Improved Performance

To better understand the tangible benefits of RAM caching, consider these real-world scenarios:

Gaming PCs: Gamers experience reduced loading times, faster texture loading, and smoother gameplay. RAM caching keeps frequently accessed game assets readily available, leading to a more immersive gaming experience.

Business Servers: RAM caching accelerates data access for critical applications and databases in enterprise environments. This results in faster response times, increased user productivity, and reduced downtime.

Data Centres: Data centres use RAM caching to deliver low-latency access to frequently used data and to improve the performance of virtualised servers. This translates to more efficient data processing and can reduce infrastructure costs.

Understanding how RAM caching can transform real-world performance scenarios highlights its relevance and impact in diverse computing environments. In the subsequent sections, we will delve into potential challenges and limitations associated with RAM-cached drives and provide insights into making informed decisions when adopting this technology.

Challenges and Limitations

A. Potential Drawbacks of RAM Caching

While RAM caching offers remarkable performance enhancements, it comes with certain potential drawbacks:

Limited by Available RAM: The effectiveness of RAM caching is constrained by the amount of RAM available in your system. If your RAM cache is too small to accommodate frequently used data, it may result in frequent cache evictions and reduced performance gains.

Risk of Data Loss: RAM is volatile, so writes that are held in the cache and have not yet been written to the primary storage device are lost if the system loses power or crashes. Data cached only for reading is not at risk, because the primary drive still holds a copy. The risk to cached writes can be mitigated with appropriate backup and recovery strategies.

Compatibility Concerns: Not all hardware and software configurations support RAM caching. Compatibility issues can arise if your system lacks the necessary components or drivers for RAM caching.
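The data-loss risk is easiest to see with a toy model of a write cache that defers writes to the drive (often called write-back caching). In the sketch below, the class `WriteBackCache` and its methods are hypothetical, and plain dictionaries stand in for RAM and the drive.

```python
class WriteBackCache:
    """Toy write-back cache: writes land in RAM first and reach disk on flush."""

    def __init__(self):
        self.disk = {}      # stands in for the persistent primary drive
        self.cache = {}     # stands in for volatile RAM
        self.dirty = set()  # keys written to RAM but not yet to disk

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)

    def flush(self):
        # Copy every pending write to the drive, then mark the cache clean.
        for key in self.dirty:
            self.disk[key] = self.cache[key]
        self.dirty.clear()

    def power_loss(self):
        # RAM contents vanish; report which writes never reached the drive.
        lost = sorted(self.dirty)
        self.cache.clear()
        self.dirty.clear()
        return lost


store = WriteBackCache()
store.write("a", 1)
store.flush()             # "a" is now safe on disk
store.write("b", 2)       # "b" exists only in RAM
lost = store.power_loss()
print(lost, store.disk)
```

Only the write that had not been flushed is lost, which is why flush intervals, write-through modes, and a UPS all reduce exposure.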

B. Compatibility Issues and Hardware Requirements

Ensuring compatibility and meeting hardware requirements is essential when implementing RAM caching:

Motherboard Support: Check whether your motherboard and chipset support the caching feature you plan to use. Some caching technologies depend on specific chipsets or dedicated cache modules, while others may not be supported on your platform at all.

RAM Types and Speeds: Check how much RAM you can spare for caching and whether your caching solution has any memory requirements. The RAM type (e.g., DDR3, DDR4) and speed influence how fast the cache itself is, so performance may vary between configurations.

Cache Management Software: Some RAM cached drives require specific cache management software or drivers. Ensure that your operating system supports this software and that it is correctly configured.

C. Mitigating Challenges for Optimal Performance

To optimise RAM caching and mitigate its challenges, consider the following strategies:

Choosing the Right Cache Size: Determine an appropriate size based on your usage patterns and available RAM. A larger cache can accommodate more data but may require more RAM.

Backup and Data Recovery Strategies: Implement regular data backups to protect against data loss during a power failure or system crash. Utilise uninterruptible power supplies (UPS) to prevent unexpected shutdowns.

Keeping Cache Updated: Configure your cache management software to ensure that cached data is regularly updated to reflect changes made to the primary storage drive. This prevents the cache from becoming stale.
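One way to reason about cache size is to replay a record of which blocks your workload accessed and see what hit ratio each candidate size would have achieved. The sketch below assumes a least-recently-used eviction policy and uses a short, made-up access trace; a real trace would come from your own monitoring tools.

```python
from collections import OrderedDict


def lru_hit_ratio(trace, capacity):
    """Replay a block-access trace through an LRU cache and return the hit ratio."""
    cache = OrderedDict()
    hits = 0
    for block in trace:
        if block in cache:
            hits += 1
            cache.move_to_end(block)       # refresh recency on a hit
        else:
            cache[block] = True
            if len(cache) > capacity:
                cache.popitem(last=False)  # evict the least recently used block
    return hits / len(trace)


# Made-up trace: three blocks accessed in a loop, then one new block.
trace = [1, 2, 3, 1, 2, 3, 1, 2, 3, 4]
ratios = {size: lru_hit_ratio(trace, size) for size in (1, 2, 3, 4)}
print(ratios)
```

In this trace the hit ratio stays at zero until the cache can hold all three looping blocks, then jumps, and gains nothing from a fourth slot. That pattern suggests sizing the cache to the working set rather than simply making it as large as possible.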

By understanding and addressing these challenges and limitations, you can optimise the performance and reliability of RAM-cached drives in your system. In the subsequent sections, we will guide you through making informed decisions when selecting storage solutions, assessing your specific needs, and weighing the pros and cons of RAM-cached drives against other options.

Making Informed Decisions

A. Considerations When Choosing Storage Solutions

When selecting storage solutions, particularly those involving RAM caching, it’s essential to consider a range of factors:

Budget Constraints: Determine your budget for storage solutions. RAM cached drives may come at a premium compared to traditional drives, so aligning your budget with your needs is essential.

Specific Use Cases: Identify your primary use cases. Whether it’s gaming, content creation, server operations, or general productivity, different use cases may have varying requirements for storage performance.

Future Scalability: Consider your future needs for storage capacity and performance. Ensure the selected storage solution accommodates your data growth and evolving requirements.

B. Assessing Your Specific Needs

To make informed decisions, conduct a thorough assessment of your specific needs:

Analysing Workloads: Understand the nature of your computing workloads. Are they read-heavy, write-heavy, or a mix of both? Different workloads may benefit from RAM caching differently.

Identifying Critical Applications: Determine which applications or processes are critical to your workflow. RAM caching can significantly improve the performance of these key components.

Estimating Data Growth: Predict your data growth over time. Ensure that your storage solution, including RAM caching, can accommodate this growth without compromising performance.

Future-Proofing: Consider the longevity of your investment. Choose a storage solution that can adapt to future technologies and trends, ensuring it remains relevant and efficient.

C. Weighing the Pros and Cons of RAM Cached Drives

To make an informed decision regarding RAM cached drives, evaluate their pros and cons:

Pros:

Enhanced Performance: RAM caching significantly boosts data access speeds and system responsiveness.

Efficient Data Handling: Frequently used data is readily available in the cache, reducing the need to access the primary storage drive.

Extended Storage Drive Lifespan: RAM caching minimises wear and tear on the primary storage drive, potentially extending its longevity.

Cons:

Cost: RAM cached drives can be more expensive than traditional drives, especially when considering the additional cost of RAM.

Data Volatility: Data stored in the RAM cache can be lost during a system crash or power failure.

Compatibility Requirements: RAM caching may require specific hardware components; not all systems or motherboards support this technology.

By carefully considering these factors and weighing the advantages and disadvantages, you can make an informed decision regarding the adoption of RAM cached drives. Whether you prioritise performance, budget, or data reliability, understanding your unique needs is key to selecting the right storage solution for your computing environment.

Case Studies

A. Real-World Examples of Organizations Benefiting from RAM-Cached Drives

To illustrate the tangible benefits of RAM cached drives, let’s explore the kinds of organisations and scenarios where this technology can make a significant impact:

E-commerce Websites: Online retailers rely on rapid database access and quick page loading times to enhance the user shopping experience. By implementing RAM cached drives, e-commerce websites can significantly reduce page load times, increasing customer satisfaction and sales.

Content Delivery Networks (CDNs): CDNs are critical in delivering web content quickly and efficiently to users worldwide. RAM caching helps CDNs serve frequently requested content from high-speed RAM storage, reducing latency and improving content delivery speed.

Financial Institutions: Banks and financial institutions depend on near-instantaneous access to transaction data and customer information. RAM-cached drives accelerate data retrieval for banking applications, enabling faster transactions and improved customer service.

Gaming Studios: Video game development studios benefit from RAM caching during game development. Rapid asset loading and real-time level editing become practical, streamlining the game creation pipeline and reducing development time.

B. Performance Improvements and Cost-Effectiveness

RAM cached drives can deliver notable performance improvements and cost-effectiveness in various scenarios:

Performance Metrics: Organizations implementing RAM caching often report substantial increases in performance metrics such as throughput, IOPS, and reduced latency. These improvements translate to faster data processing, quicker application launches, and smoother user experiences.

Reduced Downtime: RAM caching can significantly reduce downtime in critical applications and servers. For businesses, this translates to improved productivity, minimised revenue loss, and enhanced customer satisfaction.

Cost Savings: While the initial investment in RAM cached drives and additional RAM may seem significant, the long-term cost savings are often substantial. Reduced wear and tear on primary storage drives can extend their lifespan, reducing the need for frequent replacements.

Scalability: RAM caching is scalable, allowing organisations to adjust the cache size as their needs evolve. This flexibility ensures that performance improvements remain consistent even as data volumes grow.

These case studies and examples demonstrate that RAM cached drives can be a strategic investment for organisations seeking to optimise their storage infrastructure, improve performance, and maintain cost-effectiveness. As technology evolves, RAM caching will likely play an increasingly vital role in various industries, enabling them to stay competitive in an ever-demanding digital landscape.

Future Trends and Innovations

A. The Evolving Landscape of Storage Technology

The field of storage technology is continually evolving, driven by the need for faster, more reliable, and efficient data management. Several notable trends are shaping the future of storage technology:

Persistent Memory: The emergence of persistent memory technologies, such as Intel’s Optane, offers a bridge between RAM and traditional storage devices. These technologies promise faster data access and can replace or complement RAM caching in specific applications.

Storage-Class Memory (SCM): SCM, which aims to combine near-RAM speed with the non-volatility of storage devices, is gaining traction. SCM is expected to play a significant role in high-performance computing and data centres.

Data Centre Innovations: Data centres are evolving to meet the demands of cloud computing, AI, and big data. Storage technologies are being designed for scalability, energy efficiency, and rapid data access, with an emphasis on reducing latency.

Software-Defined Storage (SDS): SDS is on the rise, allowing organisations to manage and allocate storage resources dynamically through software rather than hardware. This approach offers greater flexibility and cost savings.

B. Predictions for RAM Cached Drives

RAM cached drives are likely to continue evolving in response to changing technology and user demands:

Wider Adoption: As the technology becomes more mainstream, RAM cached drives are expected to see wider adoption across consumer, enterprise, and data centre environments.

Integration with AI and Analytics: RAM caching could become an integral part of AI and analytics platforms, where rapid data access is crucial for processing large datasets in real time.

Enhanced Cache Management: Cache management algorithms will become more sophisticated, optimising data placement for even more significant performance gains.

Diverse Form Factors: RAM caching may be integrated into various form factors, including M.2 drives, DIMM-based solutions, and even cloud-based storage services.

C. Other Emerging Storage Solutions

In addition to RAM-cached drives, several other emerging storage solutions are worth noting:

Non-Volatile Memory Express (NVMe): NVMe SSDs are becoming increasingly prevalent due to their high-speed data access. These drives are poised to replace traditional SATA SSDs as the standard for storage.

Storage Virtualization: Storage virtualisation technologies are gaining popularity, allowing organisations to pool and manage storage resources more efficiently across their infrastructure.

Hyperconverged Infrastructure (HCI): HCI solutions, which combine computing, storage, and networking in a single integrated system, are becoming the foundation for modern data centres, simplifying storage management.

Quantum Storage: In quantum computing, entirely new storage paradigms are being explored, which may eventually offer the potential for ultra-fast, high-capacity data storage and retrieval.

The future of storage technology is dynamic and promises to bring innovations that will reshape how we store, access, and manage data. As these technologies mature and become more accessible, users and organisations will have greater options for tailoring their storage solutions to meet their specific needs and performance requirements.

Conclusion

Understanding the realm of RAM cached drives and relative performance is like having a compass in the ever-evolving world of storage technology. RAM caching’s fusion of speed and capacity has redefined storage, but knowing how it compares to traditional options is key to unlocking its true potential.

Informed decisions are the cornerstone of optimising storage solutions. Whether you’re a gamer seeking quicker load times or a business aiming to reduce downtime, comprehending relative performance ensures that RAM-cached drives align with your unique needs and priorities.

The future of storage technology is dynamic, with RAM caching set to play a pivotal role. Its integration with emerging technologies promises even greater speed and flexibility. So, embrace the power of understanding, and let it guide you through the exciting landscape of storage solutions and beyond.